To celebrate National Inclusion Week, we thought we would share how GDS enables inclusion through digital accessibility.

Digital accessibility is an important part of inclusion: many users change their settings to make websites and apps easier to use, such as increasing the font size, zooming in, or navigating with a keyboard instead of a mouse. Some people use assistive technologies, such as screen readers, voice recognition or Braille displays, to access the Internet. Digital accessibility means that everyone can perceive, understand and use websites and mobile apps.

Public sector websites and mobile apps have to be accessible under the Public Sector Bodies Accessibility Regulations. This means that everyone, including both the general public and public sector employees and workers, can expect a good level of accessibility.

The Central Digital and Data Office (CDDO) and the Government Digital Service (GDS) are responsible for the accessibility regulations. The accessibility monitoring team, based in GDS, audits public sector websites, and works with public sector organisations to fix accessibility issues.

A day in the life of accessibility monitoring

Most of the team are accessibility specialists and officers, who carry out accessibility testing, write reports, and contact the public sector organisations to help them fix issues.

Simplified tests check for common issues

Keeley is an accessibility officer in the monitoring team:

“In order to send an organisation their report, we need to complete a simplified test. This includes testing a few different web pages, including a form and a document, both manually and using an automated tool.

“We have a range of issues that we manually look for in our simplified tests. These include keyboard, resize and reflow issues. This means checking the website still functions using just the keyboard as well as in different screen settings, for example, at 200% zoom.

“We also run Axe on each webpage to help find more issues that might not be spotted at first. For example, if a link is not accessible using a screen reader, Axe will flag it. Using Axe also helps us explain how to fix the issues we find.

“Once the report has been sent, we work closely with each organisation to support them in fixing any issues and making changes to their accessibility statement.”
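As an illustration of the automated step Keeley describes, here is a minimal sketch of turning an Axe result into readable report lines. It assumes the results have been saved in the JSON shape that axe-core produces (a `violations` list whose entries have `id`, `impact`, `help` and `nodes`). The sample data, the URLs and the `summarise_violations` helper are hypothetical, and the post does not describe how the monitoring team actually processes its Axe output.

```python
import json

# Hypothetical sample in the shape of an axe-core results object.
# Only the fields used below are included; the URL is a placeholder.
SAMPLE_RESULTS = json.loads("""
{
  "url": "https://example.org/contact",
  "violations": [
    {
      "id": "link-name",
      "impact": "serious",
      "help": "Links must have discernible text",
      "nodes": [
        {"target": ["#footer > a:nth-child(2)"]},
        {"target": [".social a"]}
      ]
    }
  ]
}
""")


def summarise_violations(results: dict) -> list[str]:
    """Return one readable line per violation in an axe-core results object."""
    lines = []
    for violation in results.get("violations", []):
        # Each node is one element on the page that failed the rule.
        count = len(violation.get("nodes", []))
        lines.append(
            f"{violation['id']} ({violation.get('impact', 'unknown')}): "
            f"{violation['help']} - {count} element(s) affected"
        )
    return lines


if __name__ == "__main__":
    for line in summarise_violations(SAMPLE_RESULTS):
        print(line)
```

An automated summary like this only covers what a tool can detect, which is why the simplified test pairs it with the manual keyboard, resize and reflow checks.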

Detailed tests cover all criteria

Andrew is an accessibility specialist in the monitoring team:

“For a small number of websites and mobile apps, we run detailed accessibility tests to see if they meet the full range of accessibility criteria. This might mean reporting a missed bin collection using a screen reader, booking a journey without using a mouse or watching an organisation’s promotional video to check it has captions or audio descriptions.

“You can usually get an idea of how accessible a site is, and how much people with disabilities have been considered when building it, with a few tab presses and a quick automated check.

“Although our job is reporting on accessibility issues, we’d rather not find any. Luckily, most organisations respond positively to our audits and make a real effort to remove the barriers we’ve found.”

What a test looks like

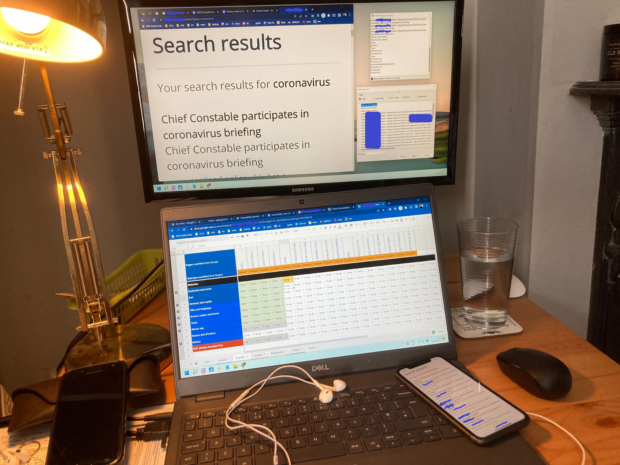

Here’s what a typical test setup looks like. For our detailed tests we use a Windows laptop. Here, the monitor shows a web page open at 400% zoom, with screen reader windows open to one side. The laptop shows a spreadsheet for tracking test progress and has headphones attached for listening to the screen reader. There’s also a mobile phone showing the same web page.
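The 400% zoom in this setup matches the condition in WCAG 2.1 success criterion 1.4.10 (Reflow), which treats a 1280 CSS pixel wide viewport at 400% zoom as equivalent to a width of 320 CSS pixels. The post does not say which criterion is being checked in the photo, so treat that link as an assumption. The arithmetic behind it can be sketched as follows, with `effective_width` being a hypothetical helper name:

```python
def effective_width(viewport_css_px: int, zoom_percent: int) -> float:
    """Width in CSS pixels that the page has to lay out in after browser zoom."""
    return viewport_css_px * 100 / zoom_percent


# A 1280px-wide browser window zoomed to 400% leaves the page 320 CSS pixels.
print(effective_width(1280, 400))  # 320.0

# The same window at the 200% zoom used in simplified tests leaves 640.
print(effective_width(1280, 200))  # 640.0
```

In practice this means a page that reflows well at 400% zoom on a desktop monitor should also behave well on a narrow mobile screen.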

Two years on from the regulations

It’s now been two years since the accessibility regulations applied to most public sector websites. We are starting to retest some websites previously monitored to check that they remain accessible.

It’s important that the public sector keeps its websites accessible as the sites are added to and changed, and that accessibility statements are reviewed regularly and kept up to date.

Find out more about what the accessibility monitoring and reporting team has been up to in our end of year report.

2 comments

Comment by Regine

Hi, where do you encode the description of the component that has an issue and the remediation in your spreadsheet? Is it only a strict compliance audit with an overall percentage of non-compliant success criteria?

Comment by jessicaeley

Hi Regine - please see the accessibility monitoring team's response below:

Hi, we use a different process for our simplified and detailed tests. For simplified, we log failures and retests on a custom platform. For detailed, we log them in a spreadsheet. In both cases, we'll log the page an issue was found on, the success criteria which failed and a description of the issue.

Our main focus is on the organisation's response to the issues we have raised, and updates to their accessibility statement, rather than scoring the audit as a percentage of failed criteria. There's general information on our monitoring processes here: https://www.gov.uk/guidance/public-sector-website-and-mobile-application-accessibility-monitoring
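To make the logging described in this reply concrete, here is a minimal sketch of a record holding the three things the team says it logs: the page, the success criterion that failed, and a description of the issue. The class name, field names, the `fixed_on_retest` flag and the sample entries are all hypothetical. The team's custom platform and spreadsheet layout are not described in the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IssueRecord:
    """One logged failure. Field names are illustrative, not the team's own."""
    page_url: str                   # page the issue was found on
    success_criterion: str          # WCAG success criterion that failed
    description: str                # what the tester observed
    fixed_on_retest: bool = False   # hypothetical flag for the retest outcome


def outstanding(records: list[IssueRecord]) -> list[IssueRecord]:
    """Return the issues that have not yet been confirmed as fixed."""
    return [r for r in records if not r.fixed_on_retest]


# Placeholder entries for illustration only.
records = [
    IssueRecord("https://example.org/form", "2.1.1 Keyboard",
                "Submit button cannot be reached with the Tab key."),
    IssueRecord("https://example.org/", "1.4.10 Reflow",
                "Content is cut off at 400% zoom.", fixed_on_retest=True),
]

if __name__ == "__main__":
    for record in outstanding(records):
        print(f"{record.page_url}: {record.success_criterion} - {record.description}")
```

Tracking what remains outstanding, rather than a percentage score, fits the reply's emphasis on how the organisation responds to the issues raised.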