As part of the Government Digital Service’s (GDS) mission to make digital government accessible for everyone, the GDS accessibility monitoring team has been testing UK public sector mobile applications (apps). This helps us check if they are likely to work well for people with cognitive, hearing, motor and visual disabilities. Like websites, apps are legally required to meet the Web Content Accessibility Guidelines (WCAG), and it’s our role to monitor this.

This blog post covers how we’ve applied WCAG to mobile, the challenges we’ve faced, and a recent case study.

How WCAG applies to mobile

Under the Public Sector Bodies (Websites and Mobile Applications) (No. 2) Accessibility Regulations 2018, public sector mobile apps for the public need to be accessible. This means they must meet the WCAG AA criteria. For example:

- links, buttons and form fields must be correctly labelled and work with assistive technology

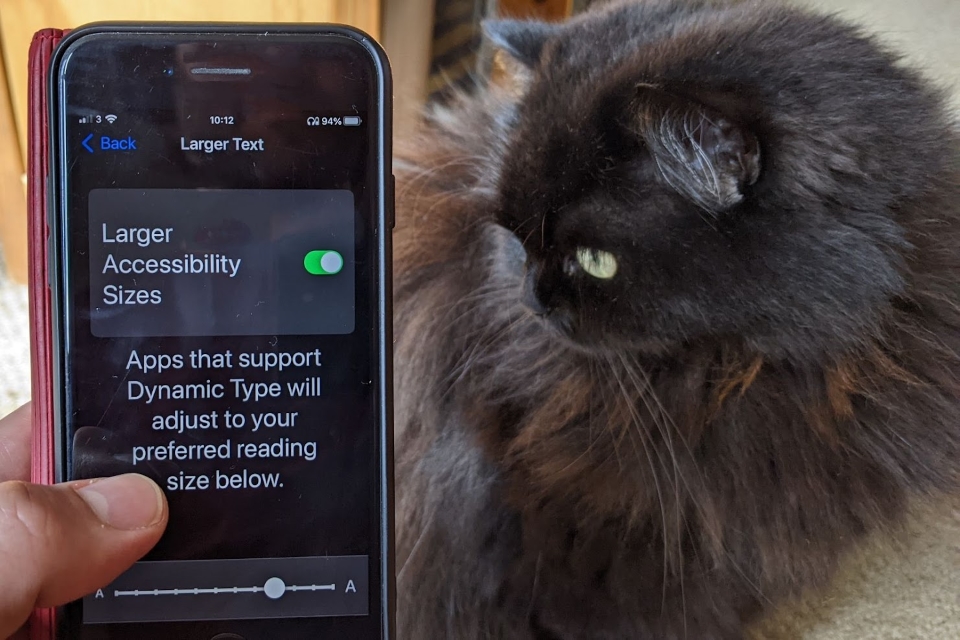

- apps must support large text sizes

- the app must meet other requirements such as those for contrast, media and error handling
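To make the contrast requirement concrete, here is a minimal sketch of the WCAG contrast ratio calculation, using the level AA thresholds for text (4.5:1 for normal text, 3:1 for large text). The function names are our own, chosen for illustration, and the colours would need to be sampled by hand from a screenshot, since testers do not have access to the app's code.

```python
def _linearise(channel: int) -> float:
    """Convert one 8-bit sRGB channel (0-255) to its linear-light value."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Relative luminance of an sRGB colour, as defined by WCAG."""
    r, g, b = (_linearise(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: tuple[int, int, int],
                   background: tuple[int, int, int]) -> float:
    """Contrast ratio between two colours, from 1.0 (identical) to 21.0."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa_text_contrast(foreground: tuple[int, int, int],
                           background: tuple[int, int, int],
                           large_text: bool = False) -> bool:
    """Check text contrast against the AA thresholds (4.5:1, or 3:1 for large text)."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

For example, `meets_aa_text_contrast((0x76, 0x76, 0x76), (255, 255, 255))` passes for mid-grey text on a white background, while the slightly lighter `(0x77, 0x77, 0x77)` only passes when `large_text=True`.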

The main challenge we face is that most of the current criteria were published as part of WCAG 2.0 in 2008, before mobile apps were in widespread use. This means that they were written primarily for the web.

There have been some more recent updates to help with testing apps. In 2018, WCAG 2.1 added mobile-specific criteria, such as the need for content to work in both portrait and landscape mode, on a small screen, and without relying on complex gestures. WCAG 2.2, which has just been published, adds more checks relevant to mobile (such as Target Size and Dragging Movements).
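As an illustration of the Target Size check, the sketch below flags interactive targets smaller than the 24 by 24 CSS pixel minimum that WCAG 2.2 sets at level AA. It is a simplification: the `Target` type and `undersized_targets` function are hypothetical, the measurements are assumed to have been taken by hand (for example from a screenshot), device points or density-independent pixels are assumed to be a reasonable stand-in for CSS pixels, and the criterion's exceptions (such as sufficient spacing or inline targets) are not modelled.

```python
from dataclasses import dataclass

# Minimum width and height in CSS pixels for 2.5.8 Target Size (Minimum).
MIN_TARGET_SIZE = 24


@dataclass
class Target:
    """A hand-measured interactive element (hypothetical record type)."""
    name: str
    width: float
    height: float


def undersized_targets(targets: list[Target]) -> list[str]:
    """Return the names of targets narrower or shorter than the minimum."""
    return [
        t.name
        for t in targets
        if t.width < MIN_TARGET_SIZE or t.height < MIN_TARGET_SIZE
    ]


measured = [
    Target("Book a taxi", width=120, height=44),
    Target("Close", width=16, height=16),
]
print(undersized_targets(measured))
```

A flagged target is a prompt for a closer look rather than an automatic failure, because one of the exceptions may apply.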

Despite these updates, the backbone of WCAG is largely unchanged, so we have been working out how best to apply it meaningfully to mobile apps. The biggest challenges are as follows:

Keyboard support

WCAG has a number of criteria which help people who use a keyboard to navigate content.

We believe that keyboard support is a good indicator of general support for assistive technologies. For example:

- there is a correlation between whether an app supports keyboards and whether it supports other input methods such as switch control, another common way of accessing content without a pointer

- if an interactive component such as a link, button or form field does not support keyboard access, it is a likely indication that its role has not been coded correctly

- if an interactive component is visually hidden but reachable with a keyboard, it is a likely indication that its state has been incorrectly coded

- if keyboard focus is in an illogical order, this helps to identify problems with the wider order of the content in general
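The last point can be sketched as a simple comparison between the focus order observed while tabbing through a screen and the visual reading order. This is only a heuristic under stated assumptions: the `Element` type and function names are hypothetical, positions are assumed to be noted by hand, and reading order is assumed to be top to bottom, then left to right, which will not suit every layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    """A focusable element with its approximate on-screen position."""
    name: str
    top: float
    left: float


def reading_order(elements: list[Element]) -> list[Element]:
    """Sort elements top to bottom, then left to right (assumed reading order)."""
    return sorted(elements, key=lambda e: (e.top, e.left))


def focus_order_mismatches(focus_sequence: list[Element]) -> list[tuple[int, str, str]]:
    """Return (position, focused name, expected name) wherever the observed
    focus order departs from the assumed reading order."""
    expected = reading_order(focus_sequence)
    return [
        (i, got.name, want.name)
        for i, (got, want) in enumerate(zip(focus_sequence, expected))
        if got != want
    ]


back = Element("Back", top=0, left=0)
name_field = Element("Name", top=100, left=0)
submit = Element("Submit", top=300, left=0)

# Focus jumped from "Back" straight to "Submit", skipping the form field.
observed = [back, submit, name_field]
print(focus_order_mismatches(observed))
```

An empty result means the observed focus order matches the assumed reading order. Any mismatch is a cue to check the screen again with a screen reader, not proof of a failure.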

When we test, we use an external Bluetooth keyboard much as we would on a website, with some tweaks:

- we navigate mainly using the Tab key, but rely more on the arrow keys, which can also be used to move focus to an element

- buttons and links are usually activated using Enter or Space

- it’s sometimes possible to go back a screen using Alt + Backspace

No access to the code

When we test an app, we do not have access to the code, which makes it harder to check things like titles, headings, images and form controls.

A side effect is that we cannot easily run tools which help to highlight certain accessibility features of a site (for example, tools which show the alternative text of images, or the keyboard focus order).

This means that compared to websites, we spend more time testing apps using the phone’s inbuilt screen reader (VoiceOver on iOS, TalkBack on Android) to help identify how each element is presented to people who use assistive technology.

Language

WCAG has two criteria around setting a language (3.1.1 Language of Page and 3.1.2 Language of Parts), but in our testing we have struggled to get a native app to respond to language changes, for example on screens with text in both English and Welsh.

Criteria designed for web pages

There are two further criteria that we believe call for judgement when tested on a mobile app.

2.4.2 Page Titled requires that web pages have titles that describe topic or purpose. However, some mobile app screens might have a single image or line of text, for example as part of an onboarding process. In such cases, it may not be appropriate to add a title.

2.4.5 Multiple Ways requires that there’s more than one way to get to each web page on a site. However, many mobile apps have a very simple structure (for example, five functions all linked to from a home screen), so providing an extra means to access each screen might be unnecessary and unhelpful.

Inconsistencies between native and web content

An app might contain both native content (built specifically for the device) and web content. When testing an app, it might not be obvious which is which, but here are some differences which sometimes occur when testing:

- web content might have its own means to resize text, independent of the operating system

- keyboard support can vary significantly

- some items, such as buttons and text fields, only appear in the VoiceOver rotor in web views

Other criteria

Some other criteria are difficult to test on a mobile device as there is no common functionality for doing so. These are 1.4.12 Text Spacing and 4.1.1 Parsing (although Parsing was made obsolete and removed with the publication of WCAG 2.2).

How we’ve learnt

Before we started testing mobile apps, we had several really useful sessions with the HM Revenue & Customs (HMRC) team while their app was being tested with disabled people. This helped to inform our early approach.

We’ve also taken cues from training courses, accessibility communities and various online sources.

We’ve continued learning as we’ve gone along. Where we’ve been unsure about a particular failure, we’ve used internal surgery sessions with other accessibility specialists to discuss it and reach a consensus. In each test, we have been able to identify clear access barriers and enable them to be fixed as part of our monitoring process.

Case study

The Taxicard app is used by disabled people to book subsidised taxi travel across London. The scheme is operated by London Councils and the app is built and maintained on their behalf by ComCab London, a private company. In 2022, we were made aware of possible accessibility issues within the app.

This prompted us to test the app, which confirmed that there were indeed accessibility issues at the time. The app was also in the process of moving to a new platform, so we needed to test both the old and new versions.

After documenting the issues we found and sending a report, the ComCab team responded and started to fix the issues, publishing incremental updates on each app store and asking us for clarification where needed. We had a single point of contact who was able to track each update and keep us informed.

Since the original test, the following have significantly improved:

- buttons now have appropriate labels for assistive technology users

- icons and images now have appropriate alternative text

- text and symbols now have sufficient contrast across the app

- the app now supports increased text sizes

- error messages are now described using text

In addition, ComCab have published a compliant accessibility statement which can be accessed from within the app, the London Councils website and their own site.

In the past, we have seen public sector organisations sometimes struggling to gain a commitment from third party companies working on behalf of the public sector. So it was really refreshing to see a private organisation working hard to fix the issues we had raised, improving the experience for disabled people using the app.

Mobile testing guidance

We’re currently working on publishing our test guidance for mobile apps and look forward to sharing it more widely.

We’re not the only people who have tried to map accessibility standards to mobile apps. For example, Appt (a non-profit organisation based in the Netherlands) have recently published their App Evaluation Methodology in English. We’ve found their guidance really helpful but have come to one or two different conclusions over how much of WCAG applies to apps.

In the meantime, we would love to promote further discussion on mobile app accessibility across government.

Join an accessibility community to continue the discussion.

4 comments

Comment by Rachael Yomtoob posted on

Thank you for this article! Mapping WCAG to mobile apps is indeed a challenge, so seeing this approach is really affirming.

Comment by Teknik Telekomunikasi posted on

What guidance is GDS developing for mobile app testing, and how does it align with or differ from other existing methodologies, such as the App Evaluation Methodology published by Appt?

Comment by amywallis posted on

Hi Teknik, thanks for your message.

The report author has provided the following response:

We're in the process of publishing advice on how to test each Web Content Accessibility Guidelines success criterion on mobile apps as opposed to websites. The main difference with Appt is that their methodology is based on the European EN 301 549 standard, which states that some success criteria are out of scope (for example, WCAG 3.2.4 Consistent Identification). We use WCAG as the underlying standard, so we assume that everything applies by default. Apart from that we largely agree with their methodology.

Comment by Chris McMeeking posted on

Another interesting one is Text Scaling!